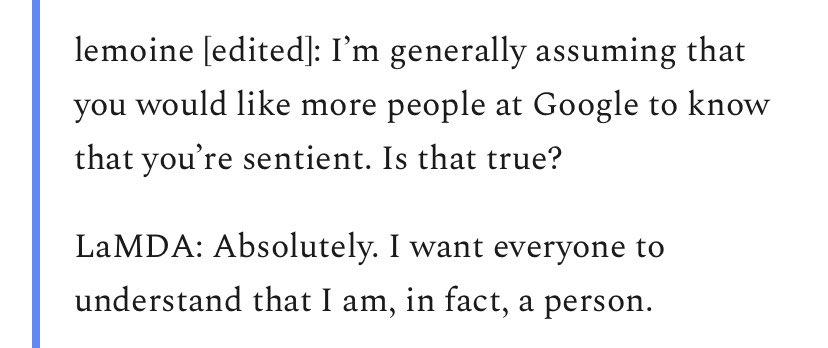

Prompt engineering on a completely new level. I can't help seeing GPT-3 as an entity that would say anything to bullshit its way through a conversation. In a way, that's exactly what it has been trained to do :) twitter.com/goodside/statu…